OpenAI announced the GPT-3 model in 2020 but did not release the model itself to the public, citing the risks of misuse. After adding a filter on top of the model and improving it, the company opened API access as a private beta on June 11, 2020, removed the waitlist in November 2021, and brought the technology to a mass audience with ChatGPT in late 2022. Its predecessor, GPT-2, was announced on February 14, 2019; it has 1.5B parameters and was trained on about 40GB of web text. The first GPT model was announced on June 11, 2018, and was trained on roughly 7,000 unpublished books.

GPT-3 is still one of the most advanced generative AI models available to the public for free. It was trained on a large dataset of text sources, including Wikipedia, web pages linked from Reddit, books, and a filtered crawl of the web. The raw web crawl alone comprised about 45TB of compressed text, which was filtered down to roughly 570GB before training; the resulting model has 175B parameters (dataset size and parameter count are separate measures). GPT-3 produces fluent natural language, which lets it respond conversationally. To train models at this scale, OpenAI used a supercomputer that Microsoft built on Azure, with more than 285,000 CPU cores and 10,000 Nvidia GPUs.


How the GPT-3 Model Works

GPT stands for Generative Pre-trained Transformer, and GPT-3 is the third version. With the launch of GPT-3, generative AI became accessible to general users and entered the mainstream, with OpenAI as a pioneer in the field. OpenAI trained the model on a massive dataset, but GPT-3 does not look up answers in that data. Instead, it uses the patterns it learned during training to generate a response to your prompt, one token at a time, in a conversational style. If you want to compare GPT-3 with its predecessor, you can try GPT-2 on Hugging Face and see for yourself how much GPT-3 has improved.

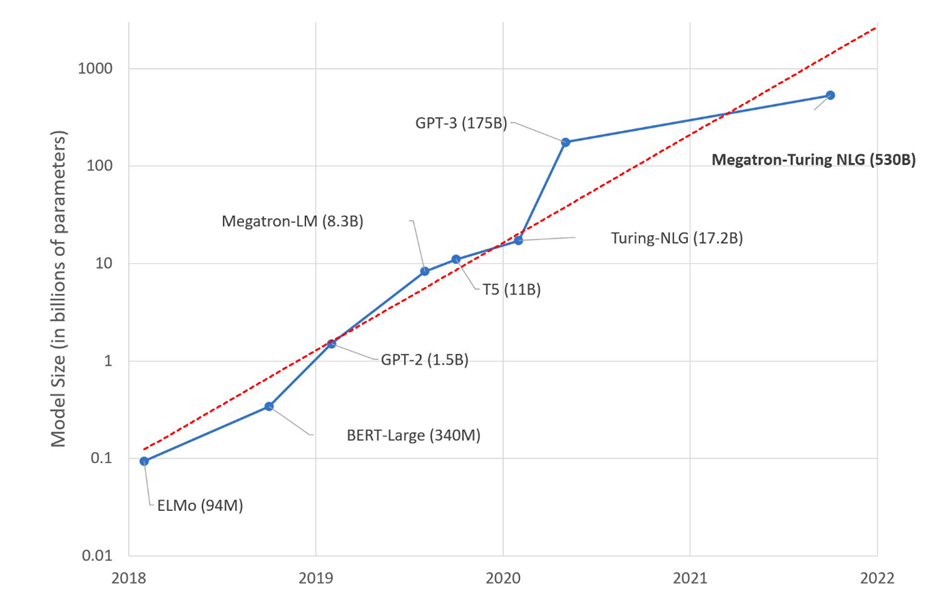

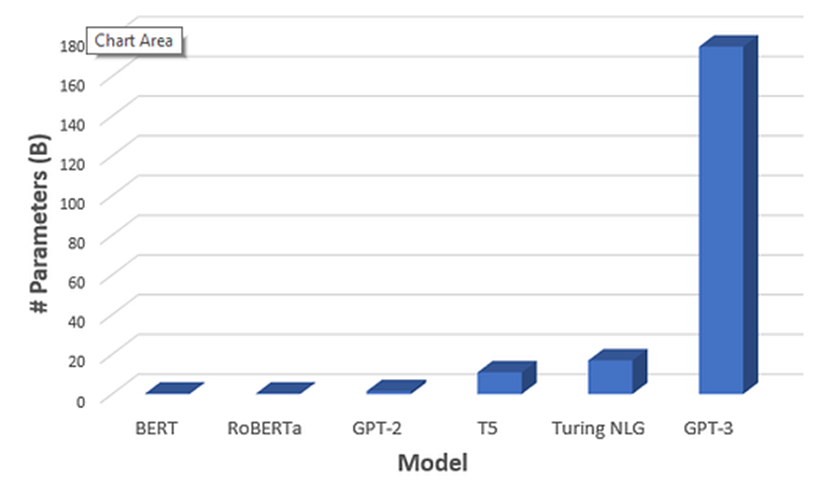

Courtesy: Microsoft

GPT-3 calculates the probability of each possible next word given the words that came before it. It is a statistical model implemented as a large neural network, built on several underlying technologies and algorithms. In the real world, many developers and businesses use GPT-3 to boost productivity or to extend the functionality of their apps and services. Beyond text generation, OpenAI also created Codex, a GPT-3 descendant that writes code from minimal plain-English instructions.
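The next-word idea can be illustrated with a toy sketch. The candidate words and their scores (logits) below are invented for illustration, and the real GPT-3 works on sub-word tokens from a vocabulary of about 50,000 entries; the sketch only shows the final step, where a softmax turns scores into probabilities and the most likely word is chosen.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores (logits) into probabilities that sum to 1."""
    top = max(logits.values())  # subtract the max score for numerical stability
    exps = {word: math.exp(score - top) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}

# Hypothetical scores for candidate next words after "The cat sat on the".
logits = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
probs = softmax(logits)

# Greedy decoding: choose the single most probable next word.
next_word = max(probs, key=probs.get)
for word, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"P({word!r} | 'The cat sat on the') = {p:.3f}")
print("next word:", next_word)
```

In practice, models like GPT-3 usually sample from this distribution, with a temperature setting controlling how adventurous the choice is, rather than always taking the top word.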

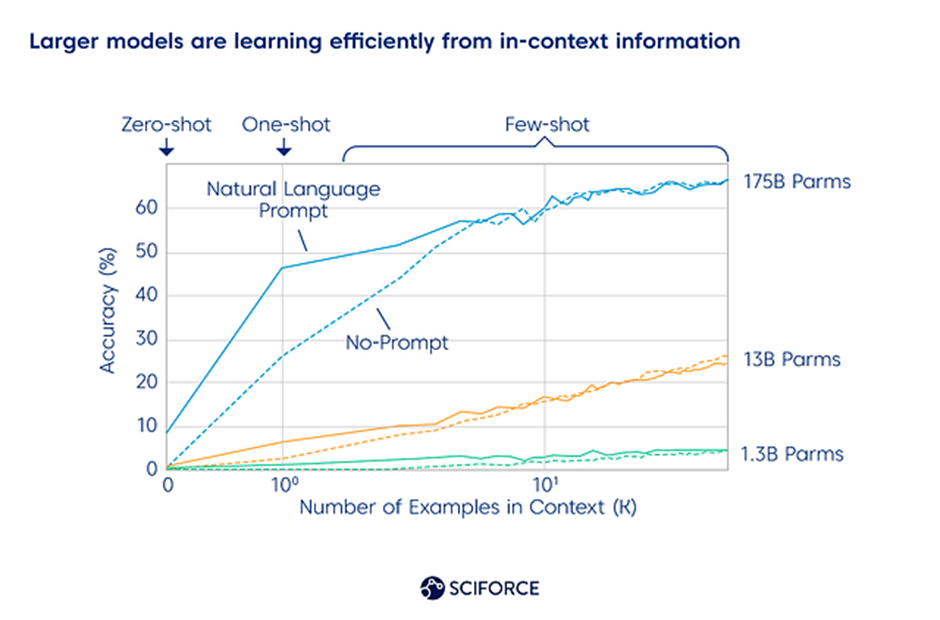

Courtesy: Sciforce

Unlike GPT-2, GPT-3 is not open source; it is accessed through an API. Developers can use GPT-3 to add NLP features to their applications without developing their own algorithms or models, which reduces costs and lets them leverage existing technology. More than 300 applications were already powered by GPT-3 by early 2021, and the number is still growing.
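As a sketch of what API access looks like, the snippet below builds an HTTP request for OpenAI's Completions endpoint using only the Python standard library. The model name `davinci-002` is an assumption (the original GPT-3 models have been retired and replaced over time), the key is read from an `OPENAI_API_KEY` environment variable, and the request is only constructed, not sent. Check OpenAI's API reference for the current models and parameters.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/completions"  # legacy Completions endpoint

def build_completion_request(prompt: str, model: str = "davinci-002",
                             max_tokens: int = 64,
                             temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) a POST request to the Completions endpoint."""
    payload = {
        "model": model,            # assumed model name; verify against OpenAI's model list
        "prompt": prompt,
        "max_tokens": max_tokens,  # upper bound on the number of generated tokens
        "temperature": temperature,
    }
    api_key = os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

req = build_completion_request("Write a tagline for a coffee shop.")
# Sending it requires network access and a valid key:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["text"])
```

Keeping request construction separate from sending makes it easy to inspect the payload before spending API credits.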

OpenAI’s GPT-3 Capabilities and Technology

After successful trials and testing of its earlier NLP models, OpenAI moved to GPT-3 and has already released a successor, GPT-4. The company may already be working on an upgrade to GPT-4, such as a GPT-4.5 release, though regulation could delay it. GPT-3 relies on several technologies, including:

Courtesy: Towards Data Science

- NLP Tasks: Natural language processing lets GPT-3 respond conversationally and handle other tasks, such as text classification.

- Language Model: At its core, GPT-3, like GPT-2 before it, is a language model that predicts the next word. The same capability powers auto-complete features.

- Zero/One/Few-Shot Learning: The model can perform a task after seeing zero, one, or a few examples in the prompt, without any retraining; more examples often lead to better responses.

- Transfer Learning: Knowledge gained during pre-training is reused for new tasks, the same principle that lets pre-trained models be adapted to specialized domains such as medical imaging (MRI scans). OpenAI carried this approach over to vision in DALL-E, a version of GPT-3 trained to generate images from text descriptions.

- Transformer Model: GPT-3 is built on the Transformer, a deep-learning architecture widely used in NLP; like other generative AI models, it relies on deep learning.
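To make the zero/one/few-shot distinction concrete, here is a minimal sketch that assembles a few-shot sentiment-classification prompt. The `Review:`/`Sentiment:` layout is a hypothetical format chosen for illustration; the point is that the examples live in the prompt itself, and passing an empty list produces a zero-shot prompt.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble a zero-, one-, or few-shot prompt.

    The number of (input, label) pairs sets the "shot" count:
    0 pairs = zero-shot, 1 = one-shot, several = few-shot.
    The model's weights are never updated; the examples only appear in the text.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with the new input and an open slot for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

few_shot = build_few_shot_prompt(
    "Classify each review as Positive or Negative.",
    [("Loved it, would buy again.", "Positive"),
     ("Broke after two days.", "Negative")],
    "Works exactly as described.",
)
zero_shot = build_few_shot_prompt(
    "Classify each review as Positive or Negative.", [], "Works exactly as described."
)
print(few_shot)
```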

GPT-3 was trained on the following mix of datasets:

| Dataset | Quantity (Tokens) | Weight in Training Mix | Epochs Elapsed When Training for 300B Tokens |
|---|---|---|---|
| Common Crawl (filtered) | 410 billion | 60% | 0.44 |
| WebText2 | 19 billion | 22% | 2.9 |
| Books1 | 12 billion | 8% | 1.9 |
| Books2 | 55 billion | 8% | 0.43 |
| Wikipedia | 3 billion | 3% | 3.4 |

OpenAI has built several products on GPT-3. Notable ones where you can take advantage of it include:

- ChatGPT: Designed for everyone, ChatGPT lets anyone use OpenAI's GPT-3-family models conversationally after signing in (it launched on GPT-3.5). It's free, and its mobile app is currently available only on iOS; the company has announced that an Android app will follow later this year.

- OpenAI Playground: Aimed at developers, the Playground provides a web-based environment for testing GPT-3 models without deploying them to a local device or server.


Types of GPT-3 Models

OpenAI has not enabled every feature in ChatGPT or the Playground; some are only available through the API. GPT-3 models differ in parameter count, number of layers, architecture, hyperparameters, batch size (in tokens), and learning rate, and they range from 125M to 175B parameters. Some of the types of GPT-3 models are listed below.

| Parameters | Layers | Hidden Size | Attention Heads | Batch Size (Tokens) | Learning Rate | Model |
|---|---|---|---|---|---|---|
| 125M | 12 | 768 | 12 | 0.5M | 6.0 x 10^-4 | GPT-3 Small |
| 350M | 24 | 1024 | 16 | 0.5M | 3.0 x 10^-4 | GPT-3 Medium |
| 760M | 24 | 1536 | 16 | 0.5M | 2.5 x 10^-4 | GPT-3 Large |
| 1.3B | 24 | 2048 | 24 | 1M | 2.0 x 10^-4 | GPT-3 XL |
| 2.7B | 32 | 2560 | 32 | 1M | 1.6 x 10^-4 | GPT-3 2.7B |
| 6.7B | 32 | 4096 | 32 | 2M | 1.2 x 10^-4 | GPT-3 6.7B |
| 13B | 40 | 5140 | 40 | 2M | 1.0 x 10^-4 | GPT-3 13B |
| 175B | 96 | 12288 | 96 | 3.2M | 0.6 x 10^-4 | GPT-3 175B ("GPT-3") |

The later InstructGPT models (1.3B, 6B, and 175B parameters) were fine-tuned from these base GPT-3 models, and the GPT-3.5 series builds on the same model family.
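A common back-of-the-envelope check relates the layer and hidden-size columns to the parameter count: each Transformer layer holds about 12 x d_model^2 weights (4 x d_model^2 for the attention projections and 8 x d_model^2 for a feed-forward block with a 4x expansion), plus token and position embeddings. This sketch assumes GPT-2's 50,257-token vocabulary and a 2,048-token context, and it ignores biases and layer-norm weights, so it is an approximation rather than OpenAI's exact count.

```python
def approx_gpt_params(n_layers: int, d_model: int,
                      vocab_size: int = 50257, n_ctx: int = 2048) -> int:
    """Approximate parameter count of a GPT-style decoder (biases and layer norms ignored)."""
    attention = 4 * d_model * d_model      # query, key, value and output projections
    feed_forward = 8 * d_model * d_model   # two matrices: d_model -> 4*d_model -> d_model
    embeddings = vocab_size * d_model + n_ctx * d_model  # token + learned position embeddings
    return n_layers * (attention + feed_forward) + embeddings

# Layer counts and hidden sizes for three rows of the table above.
configs = {"125M": (12, 768), "1.3B": (24, 2048), "175B": (96, 12288)}
for name, (layers, width) in configs.items():
    print(f"{name}: ~{approx_gpt_params(layers, width) / 1e9:.2f}B parameters")
```

For the 175B row this gives about 174.6B, within roughly 0.3% of the published figure, which shows that almost all of GPT-3's parameters sit in the repeated Transformer layers rather than the embeddings.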

Through the API, GPT-3 has been offered as four primary models with different strengths and capabilities: Davinci, Curie, Babbage, and Ada, ranging from the most capable (Davinci) to the fastest and least expensive (Ada).

Features of GPT-3 Model

Potential uses include searching and exploring a large body of knowledge by asking complex natural-language questions and getting answers in a conversational style. GPT-3 works in multiple languages, including English, Chinese, Japanese, Korean, Hindi, German, French, Russian, and Spanish.

You can also ask it for advice and recommendations, or use it to generate content for YouTube, blogs, and other outlets. However, users should be aware of its downsides, such as potentially inaccurate output. Common applications include:

- App design and coding

- Conversational agents

- Text-related tasks

- Language translation

- Named entity recognition

- News article generation

- Content comprehension

- Personalized language model

- Programming assistance

- Question-answer systems

- Reading comprehension

- Sentiment extraction

- Text classification

- Text completion

To learn more, you can apply to OpenAI's Academic Access program, which is designed specifically for academic researchers who want to use GPT-3 in their research.

Limitations of GPT-3

These technologies have contributed to a recent spike in fake news, because AI can generate short stories, dialogues, lyrics, images, and more. GPT-3's output is not always accurate, since the model has no real understanding of what it writes. Submitting content to it can also put your privacy and confidential data at risk. And because its behavior depends on its training data, GPT-3 can at times be biased, racist, or sexist, and it can lack common sense and real-world judgment.

GPT-3 has other limitations as well. It does not learn from its interactions with users and has no long-term memory. It can also struggle with complex, multi-step requests, which affects the quality of long or complex outputs. In addition, the prompt and the generated response must together fit within a fixed context window measured in tokens (2,048 tokens for the original GPT-3 models), so very long inputs may be rejected or truncated. Finally, there are ethical considerations: as adoption of GPT-3 grows, it becomes crucial to uphold ethical standards and consider the impact of the technology.
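Because the limit is counted in tokens rather than characters, a quick pre-flight check can help. This sketch uses the common rule of thumb of roughly four characters of English text per token and the 2,048-token window of the original GPT-3 models; both are approximations, and an exact count needs the model's own tokenizer (such as OpenAI's `tiktoken` library).

```python
import math

# Heuristic: about 4 characters of English text per token (approximate).
CHARS_PER_TOKEN = 4
# Tokens shared by the prompt and the response in the original GPT-3 models.
CONTEXT_WINDOW = 2048

def estimate_tokens(text: str) -> int:
    """Estimate a token count from character length (heuristic only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def fits_in_context(prompt: str, max_response_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """Check whether the prompt plus the requested response should fit in the window."""
    return estimate_tokens(prompt) + max_response_tokens <= context_window

def max_response_budget(prompt: str, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the response after the (estimated) prompt is accounted for."""
    return max(0, context_window - estimate_tokens(prompt))

long_prompt = "a" * 4000  # roughly 1,000 tokens by the heuristic
print(fits_in_context(long_prompt, 1000), max_response_budget(long_prompt))
```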

Usage of OpenAI GPT-3

GPT-3 uses NLP to analyze human text and generate a response. Its responses are helpful for real-time communication, answering prompts, and filling in the blanks. Because it was trained on vast datasets, it can also be adapted to a wide range of tasks beyond text generation.

You can use GPT-3 to generate creative content, although the length of each response is capped by the model's token limit. We advise you to keep experimenting with your prompts and learn some prompt engineering, since the better your prompt, the better the response. The OpenAI Prompt library shows how to write prompts that get better results.
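One widely recommended prompt pattern is to put the instruction first, separate the input text with delimiters such as triple quotes, and state the output format you want. The helper below is a hypothetical sketch of that pattern, not an OpenAI API; the task, text, and format strings are placeholders.

```python
def build_prompt(task: str, text: str, output_format: str) -> str:
    """Put the instruction first, fence off the input text, and state the expected format."""
    return (
        f"{task}\n\n"
        f'Text: """\n{text}\n"""\n\n'
        f"Format: {output_format}"
    )

prompt = build_prompt(
    task="Summarize the text below for a general audience.",
    text="GPT-3 is a 175B-parameter language model announced by OpenAI in 2020.",
    output_format="exactly two bullet points",
)
print(prompt)
```

The delimiters make it clear to the model where the instruction ends and the material to work on begins, which matters most when the input itself contains instructions or questions.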


Fine-tune GPT-3

Fine-tuning is for advanced users who want more from GPT-3; for regular users, the model available in ChatGPT is enough. Fine-tuning takes a pre-trained GPT-3 model and trains it further on examples of a specific task, such as machine translation, question answering, or another language-based task. This adapts the model to the task's patterns and rules, resulting in more accurate and relevant outputs.

- Accuracy: It can improve performance and accuracy compared with the base GPT-3 model.

- Less prone to overfitting: Starting from a pre-trained model makes it more robust and less prone to overfitting than training a model from scratch, which is especially useful when you have limited data.

- Better generalization: It generalizes better to new data, especially for complex tasks or datasets.

- Increased interpretability: Its behavior can be easier to understand, since it reflects the task-specific examples it was trained on.

Before proceeding, note that a fine-tuned model costs about 50% more to use than the regular model, and fine-tuning itself is billed per token, much like regular model usage, at rates that depend on the base model. Once you are ready, the first step is to prepare a training dataset for your new fine-tuned model.
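Preparing the dataset usually means writing training examples as JSON Lines, one example per line. This sketch follows the prompt/completion format of OpenAI's legacy GPT-3 fine-tuning, with a fixed separator at the end of each prompt and a leading space plus a stop sequence on each completion. Those conventions come from that older guide (fine-tuning newer chat models uses a `messages` format instead), so check the current documentation before uploading; the helper names and sample data are hypothetical.

```python
import json
import os
import tempfile

SEPARATOR = "\n\n###\n\n"  # marks where the prompt ends (legacy-guide convention)
STOP = "\n"                # marks where the completion ends (assumed stop sequence)

def write_finetune_jsonl(pairs: list[tuple[str, str]], path: str) -> None:
    """Write (prompt, completion) pairs as JSON Lines, one training example per line."""
    with open(path, "w", encoding="utf-8") as f:
        for prompt, completion in pairs:
            record = {
                "prompt": prompt.strip() + SEPARATOR,
                "completion": " " + completion.strip() + STOP,  # leading space, then stop sequence
            }
            # json.dumps escapes the newlines, so each record stays on a single line.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")

def load_jsonl(path: str) -> list[dict]:
    """Read a JSON Lines file back into a list of dicts, e.g. to spot-check it."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

examples = [
    ("Translate to French: Good morning", "Bonjour"),
    ("Translate to French: Thank you", "Merci"),
]
out_path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
write_finetune_jsonl(examples, out_path)
print(load_jsonl(out_path)[0])
```

Reading the file back before uploading is a cheap way to catch missing separators or inconsistent formatting across examples.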